Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

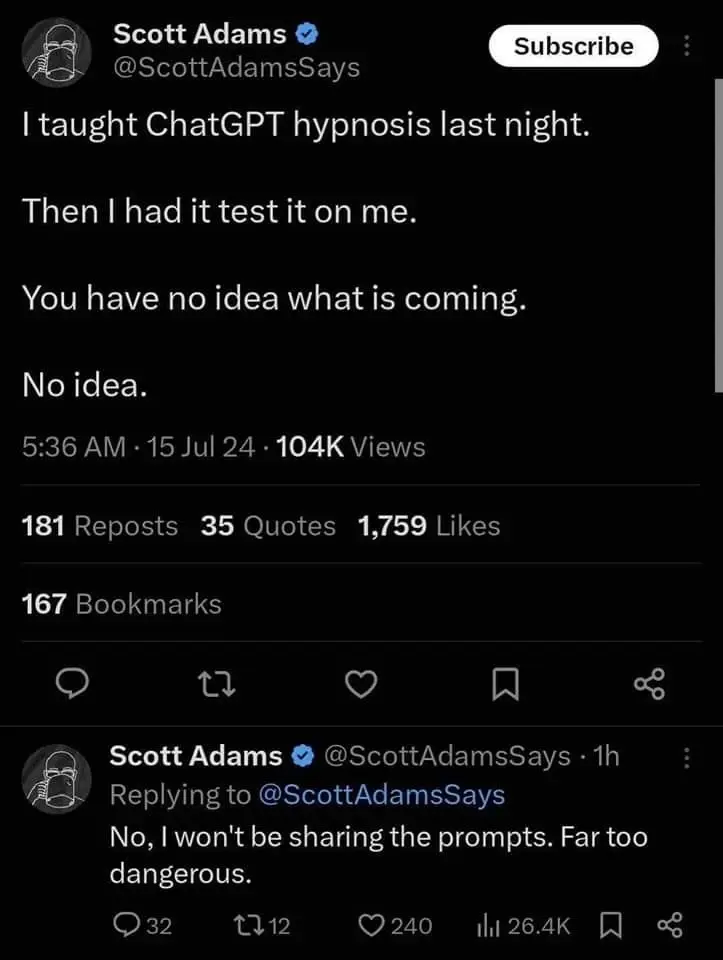

Days since last dangerous humanity ending infohazard discovered by some guy on twitter: 0

did it have sex with him

It told him he was very smart and correct so he had a hypnogasm, yes.

(For the people not in the know and who want some more psychic damage, look up Scott and his ‘I can hypnotise you into having orgasms’ blog posts, the man is utterly nuts, and it is really scary how many people seriously follow him).

Looks like he confused “tantrum” with “tantric”

No shame, happens to guys all the time

how the fuck would you explain that to humans who don’t have years of psychic damage

explaining these things to normal humans is how you turn them into humans with years of psychic damage, and I support that mission wholeheartedly

an attempt at a summary:

the dilbert guy, who believes he can hypnotize women into having sex with him, now also believes he knows a magic incantation to teach hypnosis to a chatbot, and heavily implies the chatbot was able to hypnotize him in turn (presumably into having sex with it)

who believes he can hypnotize women into having sex with him

Wait I missed this, he believes what?

Now this is doubly funny.

Content warning for this post, you will get psychic damage.

It is worse, he believes he can hypnotize people over the internet into having orgasms. Here is a link to a screenshot, not sure if I’m able to find an archive link. I think he deleted his blog.

E: a tumblr has archive links but it seems he managed to delete the first post.

Hey, haven’t seen a proof of god’s non-existence today yet, thanks!

extreme psychic damage trap

Submissives, I want you to start planning your New Year’s Eve now. Make sure you have some time alone, or some time with a partner who fully accepts your wonderful nature. But most of all, I want you wet, or hard, and especially obedient, starting now. And I want you to know how much I enjoy putting you in this state of mind. It starts now, but will get more intense by Thursday night. Expect to be a quivering, throbbing, wet mess by then.

How did that make you feel?

Queasy.

This is quite literally “creep discovers dirty-talking, thinks it’s a superpower even though he’s terrible at it”.

EDIT: From Part 3:

So today I am only talking to the perhaps 20% of you who were turned off by this series.

Bwahahahaha! That’s some funny shit mate, I’ve seen many bollocks number straight from between them buttocks, but this is fuckin’ choice.

If you see a train entering a tunnel, think of it as nothing but transportation.

Hey mate, I think… I think that’s what most normal people do? I sometimes imagine an action hero standing on top and fighting a villain before the tunnel slams them in the heads but that’s just me. What do you think about, Scott? Don’t answer that. please

On the plus side, this single image does catch one up pretty quickly

If this wasn’t Scott Adams I’d have assumed this was fetish content. Now I don’t know what to think.

Fetish content for the world’s most divorced man.

Is he more divorced than Elon these days? I didn’t think that would be possible

I saw people making fun of this on (the normally absurdly overly credulous) /r/singularity of all places. I guess even hopeful techno-rapture believers have limits to their suspension of disbelief.

At risk of being NSFW, this is an amazing self-own, pardon the pun. Hypnosis via text only works on folks who are fairly suggestible and also very enthusiastic about being hypnotized, because the brain doesn’t “power down” as much machinery as with the more traditional lie-back-on-the-couch setup. The eyes have to stay open, the text-processing center is constantly engaged, and re-reading doesn’t deepen properly because the subject has to have the initiative to scroll or turn the page.

Adams had to have wanted to be hypnotized by a chatbot. And that’s okay! I won’t kinkshame. But this level of engagement has to be voluntary and desired by the subject, which is counter to Adams’ whole approach of hypnosis as mind control.

Yep, was thinking something similar. Dude just posted a public sscce about the effect addressed in the llmentalist article

(The second c there is loadbearing under original interpretation, I guess)

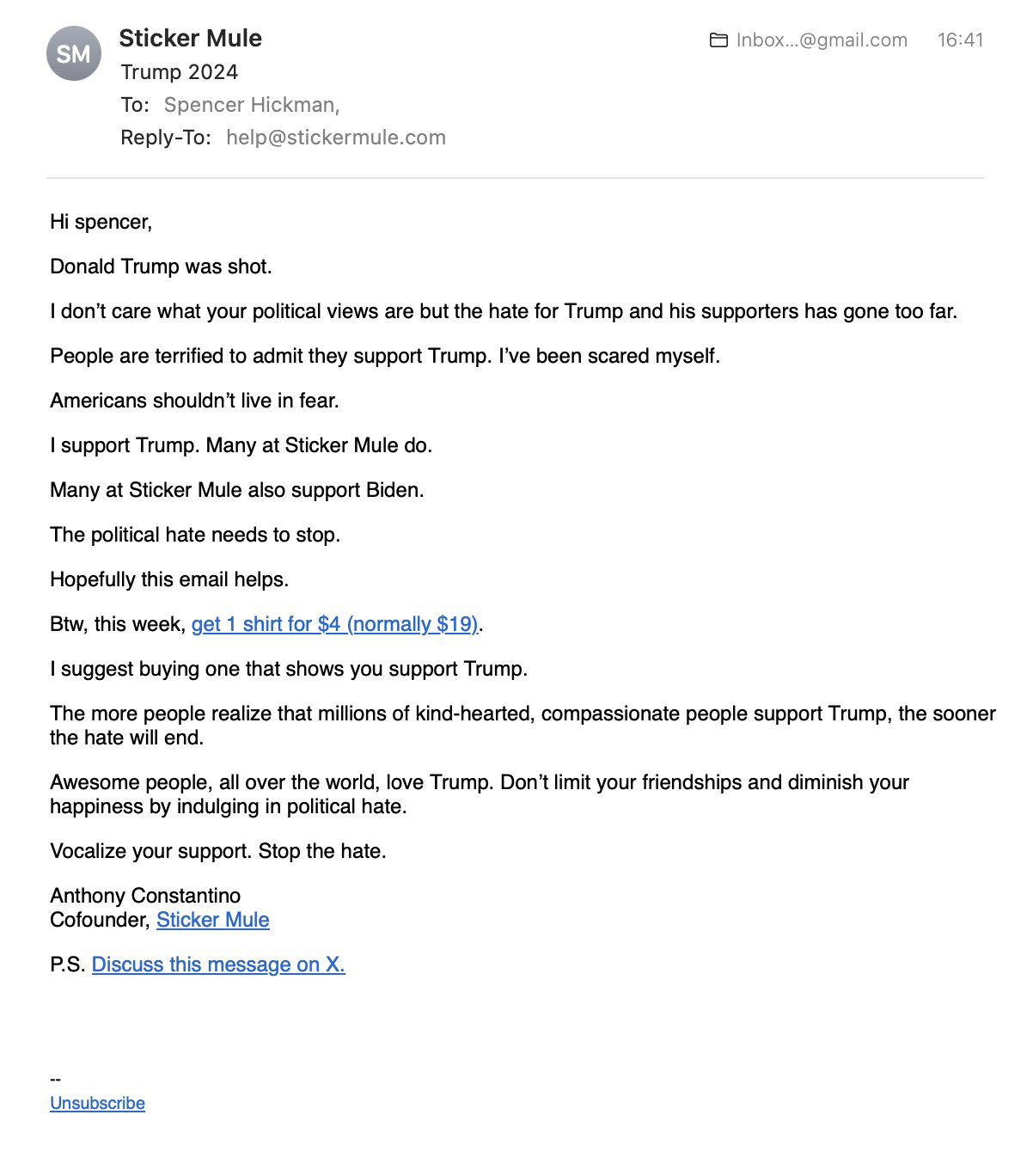

imagine one day buying some shitpost novelty stickers from that one site you heard a friend mention sometime, and then getting them and laughing about it and forgetting it

all too rapidly the years pass: young trees shoot up, older trees start boughing their way past electrical lines, the oldest all already in their position of maximum comfort. whole generations of memes have been born and died. you no longer even get to make fun of your weird aunt for still sending the dancing baby gif (these days it’s all about the autotune clips of a decade ago…)

and then one day you get reminded that the shitpost novelty sticker web store exists by receiving an email from them

what the fuck.

In his twitter thread he’s attempting to troll people in the replies. And not even doing a particularly good job at it. A bold business strategy.

Apparently they also once changed a design into ‘liberal moron’ from what was some political message once in the past, so it isn’t coming from nowhere. Guess it wasn’t an innocent mistake.

yeah stickermule is where I got the bitcoin “it can’t be that stupid” stickers

how annoying

In the spirit of the fondly-remembered “Cloud-to-Butt” plugin, I propose a new tool that transforms mentions of AI and LLMs to something else. But what shape should our word transformer take? While scatology is good shit, I bet we can come up with something more clever than “ChatGPT-to-Poop”

BTW, take 1d6 psychic damage when you realize that Cloud-to-Butt was released more than ten years ago

Edit: Favorite suggestions so far:

- AI-to-Bob @sc_griffith

- AI-to-DFE (Dumpster Fire Elemental) @YourNetworkIsHaunted

- AI-to-Statistics (HN user bitwize - https://news.ycombinator.com/item?id=40024452)

Colonizer Clippy

@o7___o7 @dgerard cloud-to-butt, you say? https://mastodon.me.uk/@pikesley/111007434026223446

Haha that’s a fantastic trophy to have :D

That must have been a glorious day!

ChatGPT-to-third world employee? Bit too long, we prob can find a non-offensive shorter word for that.

ChatGPT to Orphan Crushing Machine?

global southerner? edit: nah I don’t like it.

We could ask an LLM and see what they want us to call them. ;)

a guy named Bob

Hey, this could work…Bob is short and punchy, very butt-like!

AI->Bob

LLM->Robert

Specific Products->Random selection from (Bobby, Bobert, Robby, Boberino, Roberto, Bert, etc)
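For anyone who wants to prototype the mapping above before writing an actual browser plugin, here is a minimal Python sketch. Everything in it is an assumption for illustration: the function name `bobify`, the list of product names to match, and the choice to handle products before the generic terms. It is not how Cloud-to-Butt itself was implemented.

```python
import random
import re

# Replacement names taken from the suggestion above.
PRODUCT_NAMES = ["Bobby", "Bobert", "Robby", "Boberino", "Roberto", "Bert"]

# Hypothetical list of "specific products" to match; extend to taste.
PRODUCTS = ["ChatGPT", "Copilot", "Gemini"]

PRODUCT_RE = re.compile(r"\b(" + "|".join(map(re.escape, PRODUCTS)) + r")\b")


def bobify(text, rng=None):
    """Apply the AI->Bob, LLM->Robert, product->random-Bob substitutions."""
    rng = rng or random.Random()
    # Specific products first, each one getting a randomly chosen name.
    text = PRODUCT_RE.sub(lambda match: rng.choice(PRODUCT_NAMES), text)
    # "LLM" and "LLMs" become "Robert" and "Roberts".
    text = re.sub(r"\bLLM(s?)\b", r"Robert\1", text)
    # Whole-word "AI" only, so "AGI" and "OpenAI" are left alone.
    text = re.sub(r"\bAI\b", "Bob", text)
    return text


print(bobify("We are proud to announce our new AI, built on several LLMs."))
```

The word-boundary matching is the only real design choice here: without it, every word containing "AI" in capitals would get mangled, which is arguably funnier but makes pages harder to read.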

It’s difficult thinking of something better than butt, or even anything that isn’t butt-adjacent (poop sandwich is my goto)

My pitch would be something garbage related. Hot wet trash, maybe?

Garbage Juice?

Ok my real pitch is: something that can have agency, so that when the word substitution happens, you still have a parseable sentence, most of the time. Take “Garbage Ape” (from the heathcliff comics I guess), for example:

- “I am a Garbage Ape ethicist”

- “It’s Garbage Ape Welfare discussion week”

- “I prompted a Garbage Ape to generate these images”

- “I think Garbage Apes pose a serious existential threat”

- “We’re proud to announce our new Garbage Ape, Steve”

Though it’s hard to pick a thing that conjures the right image.

Dumpster Fire Elemental?

C:// Monkey?

NPNC (Nonplayer Noncharacter)

Dumpster Fire Elemental is great. Could be shortened to just Dumpster Elemental. Then you could have DFE/DE for AGI/AI. But also just DFE in full for everything is good too.

The rest are good but I think a little too in the neighbourhood of AI for me- I want to forget about this substitution, then later encounter an article talking about how dumpster fire elementals are consuming more energy per day than all of europe and wonder what the hell is going on, before remembering I have the plugin installed. But that’s just my stupid opinion :)

DFE just made me think up Directed Finite Generata as a thing and now I am absolutely going to use it on some promptfondlers sometime to make them itch

ooh, those first and last ones have potential!

Do Garbage Apes dream of electric slurp juice?

Followup pitch: Cvnty Regirock: https://knowyourmeme.com/memes/cunty-regirock-with-a-handbag

thinking “typewriter monkeys”, but maybe that’s too literal

Piss Juicebox (ok that is scatological, this is SO HARD)

My candidates, in no particular order:

- crap

- bullshit

- bollocks


maybe we should have a social/off topic thread too

Ah yes, Alexander’s unnumbered hordes, that endless torrent of humanity that is all but certain to have made a lasting impact on the sparsely populated subcontinent’s collective DNA.

edit: Also, the absolute brain on someone who would think that before entertaining a random recent western ancestor like a grandfather or whateverthefuckjesus.

No, it wasn’t a massive impact by numbers but that superior euro genetics has been floating around waiting to be born like the Kwisatz Haderach.

probably a mix of early Macedonian invasions.

Like his mom?

invasions.

inviasions. Sounds better in Makadonian.

AI Maxers Thrilled with Trump’s Vice President Pick JD Vance

This isn’t really too interesting yet, but something to keep an eye on. As things like blockchain and AI alignment become weirdly political it’s likely that sneering will get unpleasantly close to politics at times. And yet sneer we must.

Other self-titled techno-optimists highlighted Vance’s ties to venture capital, Thiel, and Andreessen, saying the “Gray Tribe [is] in control.” Gray Tribe is a reference to a term originating from Scott Alexander’s Slate Star Codex blog, which points to a group that is neither red (Republican) or Blue (Democrat), but a libertarian, tech savvy alternative.

I really should write more about technofascism while I still have electricity, clean water, a relatively unfractured global information network, and there aren’t too many gunshots outside


I have an extremely rough draft up on our writing community MoreWrite: here it is. it very much deserves to be restructured and expanded; I wrote this first draft in a hurry, and since then I’ve both gotten some very solid recommendations for prior work in the area to read and cite from @[email protected] and I’ve experienced more instances where technofascist methods were used to take control over open source projects away from their communities.

oh I didn’t realize this sub existed, shoulda put my old essay there instead. mb

nah all good! our writing community isn’t meant to be containment, it’s just a different category with different expectations (mainly that feedback should be constructive, since MoreWrite is for writers posting their own work)

But but, tracingwood told us that claiming NRx is close to Rationalism is a lie made up by the evil David G.

God damn, I don’t think I’ve read an article with that many name drops in a while. It’s like a Marvel film but with techfash assholes.

“JD Vance is pro-OSS [open source] AI,” Verdon tweeted. “We are so unfathomably back.”

It’s actually impressive how this guy is able to make me despise him even more every single time he opens his mouth.

Dingus McGee, VP is also a big fan of Yarvin,

it’s all politics? // it always was

Possibly the worst misunderstanding of quantum mechanics I’ve ever seen. I have no idea how anyone managed to convince themselves that the laws of physics are somehow different for conscious observers.

Can an observer be a single photon, or does it have to be a conscious human being?

The former. I’m glad we can stop the article right there and go home.

What the fuck is this question even? What the fuck is “conscious”? Do you think in the double-slit experiment we closed a guy inside the box to watch?

Under this, let’s charitably call it, “interpretation”, the Schrödinger cat analogy makes no sense, surely THE CAT is bloody conscious about ITSELF BEING ALIVE??

there’s so much quantum woo in that article I want to sneer at, but I don’t know anywhere close to enough about quantum physics to do so without showing my entire ass

To me, the most sneerable thing in that article is where they assume a mechanical brain will evolve from ChatGPT and then assume a sufficiently large quantum computer to run it on. And then start figuring out how to port the future mechanical brain to the quantum computer. All to be able to run an old thought experiment that at least I understood as highlighting the absurdity of focusing on the human brain part in the collapse of a wave function.

Once we build two trains that can run near the speed of light we will be able to test some of Einstein’s thought experiments. Better get cracking on how we can get enough coal onboard to run the trains long enough to get the experiments done.

Well a good thing to remember re quantum mechanics, Schrödinger Cat is intended as a thought experiment showing how dumb the view on QM was. So it is always a bit funny to see people extrapolate from that thought experiment without acknowledging the history and issues with it. (But I think that also depends on the various interpretations, and this means I’m showing a cheekily high amount of ass here myself).

Pretty much any mention of a thought experiment in the wild gets my hackles up. “Isn’t it cool that the cat is alive and dead at the same time?” Shut up! Shut up shut up shut up!!! Tho to be honest it might just be schrodinger’s cat that comes up. I wish they’d leave the poor cat alone, and stop trying to poison it.

I have a whole series of rants about that cat, starting with how it doesn’t illuminate anything about quantum theory specifically — as opposed to probabilistic or stochastic theories in general — and culminating in “Hey, maybe we should stop naming things after pedo creeps.”

Not surprised that a guy who thinks about poisoning cats is a creep!

What really gets me is that we never look past Schrödinger’s version of the cat. I want us to talk about Bell’s Cat, which cannot be alive or dead due to a contradiction in elementary linear algebra and yet reliably is alive or dead once the box opens. (I guess technically it should be “alive”, “dead”, or “undead”, since we’re talking spin-1 particles.)

There are some interesting ideas in that general direction (wrapping Bell inequalities within different new types of thought experiment, etc.), but some of the people involved have done rather a lot of overselling, and now bringing in talk of “AI” just obscures the whole situation. Which was already obscure enough.

If you want a serious discussion of interpretations of quantum mechanics, here is a transcript of a lecture “Quantum Mechanics in Your Face” which has the best explanation I’ve ever seen. I’d recommend the first 6 of Peter Shor’s Quantum Computation notes (don’t worry they’re each very short) for just enough background to understand the transcript.

awesome! these are going straight to the list of things I should be reading

According to one story at least, Wigner eventually concluded that if you take some ideas that physicists widely hold about quantum mechanics as postulates and follow them through to their logical conclusion, then you must conclude that there is a special role for conscious observers. But he took that as a reason to question those assumptions.

(That story comes from Leslie Ballentine reporting a conversation with Wigner in the course of promoting an ensemble interpretation of QM.)

Also there’s a book by Stephen Baxter set in his Xeelee universe which takes this premise for the cult mentality of a terrorist cell

Sorry this doesn’t really add anything, just thought it kinda funny

Yes, the problem with quantum mechanics is it’s not just your Deepak Chopras of the world that get sucked into quantum woo, but even a lot of respectable academics with serious credentials, thus giving credence to these ideas. Quantum mechanics is a context-dependent theory, the properties of systems are context variant. It is not observer-dependent. The observer just occupies their own unique context and since it is context-dependent, they have to describe things from their own context.

It is kind of like velocity in Galilean relativity, you have to take into account reference frame. Two observers in Galilean relativity could disagree on certain things, such as the velocity of an object but the disagreement is not “confusing” because if you understand relativity, you’d know it’s just a difference in reference frame. Nothing important about “observers” here.

I do not understand why so many academics have no trouble accepting that properties of systems can vary between reference frames in special relativity, but when it comes to quantum mechanics their heads explode trying to interpret the contextual nature of it and resort to silly claims like saying it proves some fundamental role for the conscious observer. All it shows is that the properties of systems are context variant. There is nothing else.

Once you accept that, then everything else follows. All of the unintuitive aspects of quantum mechanics disappear, you do not need to posit systems in two places at once, some special role for observers, a multiverse, nonlocality, hidden variables, nothing. All the “paradoxes” disappear if you just accept the context variance of the states of systems.

I honestly think anyone who writes “quantum” in an article should be required to take a linear algebra exam to avoid being instantly sacked

speaking of technofascism, we’re at the stage where supposed Democrat billionaires like the Andreessen Horowitz fuckers suddenly come out in support of Trump:

Marc Andreessen, the co-founder of one of the most prominent venture capital firms in Silicon Valley, says he’s been a Democrat most of his life. He says he has endorsed and voted for Bill Clinton, Al Gore, John Kerry, Barack Obama and Hillary Clinton.

However, he says he’s no longer loyal to the Democratic Party. In the 2024 presidential race, he is supporting and voting for former President Donald Trump. The reason he is choosing Trump over President Joe Biden boils down primarily to one major issue — he believes Trump’s policies are much more favorable for tech, specifically for the startup ecosystem.

none of this should be surprising, but it should be called out every time it happens, and we’re gonna see it happen a lot in the days ahead. these fuckers finally feel secure in taking their masks off, and that’s not good.

Apparently the “startup ecosystem” matters more than the ecosystem of, you know, actual living things.

These people are just amazingly fucking evil.

Democrat?? AH’s previous hit was the one where they enthusiastically endorsed literally the co-author of the original Fascist manifesto

and a16z does get Yarvin in to dispense wisdom and insight

See, I feel like the Democrats have had a pretty strong technocrat wing that is much more in synch with Neoreaction than people care to acknowledge. As the right shifts towards pursuing the pro-racist anti-women anti-lgbt aspects of their agenda through the courts rather than the ballot box, it seems like the fault lines between the technocratic fascists and the theocratic fascists are thinner than the lines between the techfash and the progressives.

I don’t understand why people take him at face value when he claims he’s always been a Democrat up until now. He’s historically made large contributions to candidates from both parties, but generally more Republicans than Democrats, and also Republican PACs like Protect American Jobs. Here is his personal record.

Since 2023, he picked up and donated ~$20,000,000 to Fairshake, a crypto PAC which predominantly funds candidates running against Democrats.

Has he moved right? Sure. Was he ever left? No, this is the voting record of someone who wants to buy power from candidates belonging to both parties. If it implies anything, it implies he currently finds Republicans to be corruptible.

HN: I am starting an AI+Education company called Eureka Labs.

Their goal: robo-feynman:

For example, in the case of physics one could imagine working through very high quality course materials together with Feynman, who is there to guide you every step of the way. Unfortunately, subject matter experts who are deeply passionate, great at teaching, infinitely patient and fluent in all of the world’s languages are also very scarce and cannot personally tutor all 8 billion of us on demand. However, with recent progress in generative AI, this learning experience feels tractable.

NGL though mostly just sharing this link for the ~~concept art~~ concept fart which features a three-armed many fingered woman smiling at an invisible camera.

But just focus on the vibes. This diverse group of young mutants getting an education in the overgrown ruins of this university.

Not sure how it ties into robo-feynman at all but the vibes

smh they gentrified Genosha

For example, in the case of physics one could imagine working through very high quality course materials together with Feynman

Women of the world: um, about that

Others: unslanted solar panels at ground level in shade under other solar panels, 90-degree water steps (plural), magical mystery staircases and escalator tubes, picture glass that reflects anything it wants to instead of what may actually be in the reflected light path, a whole Background Full Of Ill-Defined Background People because I guess the training set imagery was input at lower pixel density(??), and on stage left we have a group in conversation walking and talking also right on the edge of nowhere in front of them

And that’s all I picked up in about 30-40s of looking

Imagine being the kind of person who thinks this shit is good

So that’s what our kids will look like once society rebuilds after global thermonuclear war!!!

I’m sure they will thank us once we explain that the alternative was GPT-5.

jumping off a roof with an umbrella for a parachute feels tractable

We should neologise “NASB” for our community as shorthand for “Not a sneer, but”

Does anyone here know what Justine Tunney’s deal is? I’d been following her redbean project for a time but came across an article that left me rather startled

she is, regrettably, a fascist who reputedly still hides right-wing conspiracy shit in her projects

I unfortunately know that startled feeling well; I used to be a fan of the work she did with sectorlambda and similar projects

well that’s disappointing :( it sucks when terrible people do cool things

Tragic: The worst person you know developed a succinct alternative to Wordpress.

A friend who worked with her is sympathetic to her but does not endorse her: this is a tendency she has, she veers back and forth on it a lot, she has frequent moments of insight where she disavows her previous actions but then just kind of continues doing them. It’s Kanye-type behavior.

yeah, straight up alt-right techfash and long has been

comment from friend:

Slightly related: now I know when the AI crash is going to happen. Every bottomfeeder recruiter company on LinkedIn is suddenly pushing 2-month contract technical writer positions with AI companies with no product, no strategy, and no idea of how to proceed other than “CEO cashes out.” I suspect the idea is to get all of their documentation together so they can sell their bags of magic beans before the beginning of the holiday season.

sickos.jpg

I have asked if he can send me links to a few of these, I’ll see what I can do with 'em

That seems suspiciously soon, but my impression is based on nothing but vibes — a sense that companies are still buying in.

I think there was a report saying that the most recent quarter still showed a massive infusion of VC cash into the space, but I’m not sure how much of that comes from the fact that a new money sink hasn’t yet started trending in the valley. It wouldn’t surprise me if the griftier founders were looking to cash out before the bubble properly bursts in order to avoid burning bridges with the investors they’ll need to get the next thing rolling.

yeah, i think this is a last gasp or a second-last gasp.

Ed Zitron says it’ll burn by end of the year, but he doesn’t list sources either so idk

We were asking around AI industry peons in March and they all guessed around three quarters too. I woulda put it at maybe two years myself, but I was surprised at so many people all arriving at around three quarters. OTOH, I would say that just in the past few months things are really obviously heading for a trauma.

Perhaps that’s part of why so many SV types are backing Trump. Grifting off Trump may be their fallback after the AI bubble collapses.

Put me down for “doesn’t think it will end.” Did crypto end?

crypto’s VC investment fell off a cliff after the crash, and that investment is what we were talking about there

hence their pivot to AI

Oh, OK. I think all the VC-adjacent people still really believe in crypto, if it helps. They probably also don’t believe in it, depending on the room. I think it will come back.

they’ve stopped putting fresh money in, but they believe fervently in the massive bags they’re holding

I’m severely backlogged on catching up to things but my (total and complete) guess would be something like: all the recent headlines about funding and commitments are almost certainly imprecise in localisation and duration - everyone that “got money” didn’t necessarily get “money” but instead commitments to funding, and “everyone” is a much smaller set of entities that don’t encompass a really wide gallery of entities

So for all the previously-extant promptfondlers/ model dilettantes/etc out there, the writing may indeed have been (and may still be) on the wall ito runway (“startup operating capital remaining available and viable to avoid death”)

Based on the kind of headlines seen (and presuming the above supposition for the sake of argument), and the kind of utterly milquetoast garbage all the interceding months have produced, I don’t think it’s likely that much of the promised money will make it through to this layer/lot either. But that’s entirely a guess at this stage (and I can think of some fairly hefty counter-argument examples that may contribute to countering, not least because of how many people/orgs wouldn’t want to be losing face to fucking this up)

Current flavor AI is certainly getting demystified a lot among enterprise people. “Let’s dip our toes into using an LLM to make our hoard of internal documents more accessible, it’s supposed to actually be good at that, right?” is slowly giving way to “What do you mean RAG is basically LLM flavored elasticsearch only more annoying and less documented? And why is all the tooling so bad?”

“What do you mean RAG is basically LLM flavored elasticsearch only more annoying and less documented? And why is all the tooling so bad?”

Our BI team is trying to implement some RAG via Microsoft Fabric and Azure AI Search because we need that for whatever reason, and they’ve burned through almost 10k for the first half of the running month already, either because it’s just super expensive or because it’s so terribly documented that they can’t get it to work and have to try again and again. Normal costs are somewhere around 2k for the whole month for traffic + servers + database and I haven’t got the foggiest what’s even going on there.

But someone from the C suite apparently wrote them a blank check because it’s AI …

Confucius, the Buddha, and Lao Tzu gather around a newly-opened barrel of vinegar.

Confucius tastes the vinegar and perceives bitterness.

The Buddha tastes the vinegar and perceives sourness.

Lao Tzu tastes the vinegar and perceives sweetness, and he says, “Fellas, I don’t know what this is but it sure as fuck isn’t vinegar. How much did you pay for it?”

RAG

The fuck’s a rag in an AI context

so, uh, you remember AskJeeves?

(alternative answer: the third buzzword in a row that’s supposed to make LLMs good, after multimodal and multiagent systems absolutely failed to do anything of note)

It’s the technique of running a primary search against some other system, then feeding an LLM the top ~25 or so documents and asking it for the specific answer.

So you run a normal query but then run the results through an enshittifier to make sure nothing useful is actually returned to the user.

NSFW (including funny example, don't worry)

RAG is “Retrieval-Augmented Generation”. It’s a prompt-engineering technique where we run the prompt through a database query before giving it to the model as context. The results of the query are also included in the context.

In a certain simple and obvious sense, RAG has been part of search for a very long time, and the current innovation is merely using it alongside a hard prompt to a model.

My favorite example of RAG is Generative Agents. The idea is that the RAG query is sent to a database containing personalities, appointments, tasks, hopes, desires, etc. Concretely, here’s a synthetic trace of a RAG chat with Batman, who I like using as a test character because he is relatively two-dimensional. We ask a question, our RAG harness adds three relevant lines from a personality database, and the model generates a response.

> Batman, what's your favorite time of day?

Batman thinks to themself: I am vengeance. I am the night.

Batman thinks to themself: I strike from the shadows.

Batman thinks to themself: I don't play favorites. I don't have preferences.

Batman says: I like the night. The twilight. The shadows getting longer.
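To make the mechanics of that trace concrete, here is a rough Python sketch of the retrieval and prompt-assembly half of a RAG harness. It is a toy under loud assumptions: the `MEMORIES` list, the function names, and the word-overlap scoring are all made up for illustration (real setups typically query a search index or an embedding store), and no model is actually called. The prompt just ends where the model would be asked to continue.

```python
import re

# Hypothetical "personality database"; the first three lines come from the trace above,
# the last two are invented filler so retrieval has something to reject.
MEMORIES = [
    "I am vengeance. I am the night.",
    "I strike from the shadows.",
    "I don't play favorites. I don't have preferences.",
    "Alfred serves dinner at eight.",
    "The Batmobile needs new tires.",
]


def tokens(text):
    """Lowercased word set, used as a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z']+", text.lower()))


def retrieve(question, memories, k=3):
    """Return the k memories sharing the most words with the question."""
    query = tokens(question)
    # sorted() is stable, so ties keep their original database order.
    ranked = sorted(memories, key=lambda m: len(query & tokens(m)), reverse=True)
    return ranked[:k]


def build_prompt(character, question, memories, k=3):
    """Assemble the context a model would be asked to continue."""
    lines = [f"> {character}, {question}"]
    for memory in retrieve(question, memories, k):
        lines.append(f"{character} thinks to themself: {memory}")
    # The model's completion would follow this final line.
    lines.append(f"{character} says:")
    return "\n".join(lines)


print(build_prompt("Batman", "who serves dinner at night?", MEMORIES))
```

Swapping `retrieve` for a call to an existing search system is the whole trick, which is roughly the point made elsewhere in this thread about RAG being search with extra steps.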

Maybe hot take, but I actually feel like the world doesn’t need strictly speaking more documentation tooling at all, LLM / RAG or otherwise.

Companies probably actually need to curate down their documents so that simpler things work, then it doesn’t cost ever increasing infrastructure to overcome the problems that previous investment actually literally caused.

Companies probably actually need to curate down their documents so that simpler things work, then it doesn’t cost ever increasing infrastructure to overcome the problems that previous investment actually literally caused

Definitely, but the current narrative is that you don’t need to do any of that, as long as you add three spoonfuls of AI into the mix you’ll be as good as.

Then you find out what you actually signed up for is to do all the manual preparation of building an on-premise search engine to query unstructured data, and you still might end up with a tool that’s only slightly better than trying to grep a bunch of pdfs at the same time.

What do you mean RAG is basically LLM flavored elasticsearch

I always saw it more as LMGTFYaaS.

NSFW, as NSAB, I know that anti-environmentalists shout a lot about ‘what about China, China should go green first!’ while not knowing China is in fact doing a lot to try and go green (at least on the CO2 energy front; I’m not asking here to go point out all the bad things China does to fuck up the environment). I see ‘we should develop AI before China does’ being a big pro-AI argument, so here is my question: is China even working on massive A(G)I like people claim?

I am overall very uninformed about the Chinese technological day-to-day, but here are two interesting facts:

They set some pretty draconian rules early on about where the buck stops if your LLM starts spewing false information or (god forbid) goes against party orthodoxy, so I’m assuming that if independent research is happening, it doesn’t appear much in the form of public endpoints that anyone might use.

A few weeks ago I saw a report about Chinese medical researchers trying to use AI agents(?) to set up a virtual hospital in order to maybe eventually have some sort of a virtual patient entity that a medical student could work with somehow, and look how many thousands of virtual patients our handful of virtual doctors are healing daily, isn’t it awesome folks. Other than the rampant startupiness of it all, what struck me was that they said they had chatgpt-3.5 set up the doctor/patient/nurse agents, i.e. they used the free version.

So, who knows? If they are all-in on AGI behind the scenes they don’t seem to be making a big fuss about it.

Thanks, your and froztbytes’ replies answered my questions on whether they were doing it and also a bit on how seriously they are all taking it.

Typing from phone, please excuse lack of citations. Academic output in various parts of ML research has increasingly come from China and Chinese researchers over the past decade. There’s multiple inputs to this - funding, how strong a specific school/research centre is, etc, but it’s been ramping up. Pretty sure this is one of the fuel sources in keeping the pro-hegemonist US argument popular and going lately (also part of where the “we should before they do” comes from I guess)

I’ve seen some mentions of recent legislation direction about LLM usage but I’m not fully up to scratch on what it is, haven’t had the time to read up

Thanks I was not aware, so they are doing things regarding the research at least. So the “concern” isn’t totally made up. Which is what I wanted to know. As Architeuthis mentioned the legislation is against false info and against going against the party (which seem to be what you could expect).

This isn’t a sneer, just want to share this enjoyable presentation about tech and nihilism by Assoc Professor Nolen Gertz at the University of Twente here in the Netherlands https://iai.tv/video/nihilism-and-the-meaning-of-life-nolen-gertz

Not a sneer, but an observation on the tech industry from Baldur Bjarnason, plus some of my own thoughts:

I don’t think I’ve ever experienced before this big of a sentiment gap between tech – web tech especially – and the public sentiment I hear from the people I know and the media I experience.

Most of the time I hear “AI” mentioned on Icelandic mainstream media or from people I know outside of tech, it’s being used to describe something as a specific kind of bad. “It’s very AI-like” (“mjög gervigreindarlegt” in Icelandic) has become the talk radio short hand for uninventive, clichéd, and formulaic.

Baldur has pointed that part out before, and noted how it’s kneecapping the consumer side of the entire bubble, but I suspect the phrase “AI” will retain that meaning well past the bubble’s bursting. “AI slop”, or just “slop”, will likely also stick around, for those who wish to differentiate gen-AI garbage from more genuine uses of machine learning.

To many, “AI” seems to have become a tech asshole signifier: the “tech asshole” is a person who works in tech, only cares about bullshit tech trends, and doesn’t care about the larger consequences of their work or their industry. Or, even worse, aspires to become a person who gets rich from working in a harmful industry.

For example, my sister helps manage a book store as a day job. They hire a lot of teenagers as summer employees and at least those teens use “he’s a big fan of AI” as a red flag. (Obviously a book store is a biased sample. The ones that seek out a book store summer job are generally going to be good kids.)

I don’t think I’ve experienced a sentiment disconnect this massive in tech before, even during the dot-com bubble.

Part of me suspects that the AI bubble’s spread that “tech asshole” stench to the rest of the industry, with some help from the widely-mocked NFT craze and Elon Musk becoming a punching bag par excellence for his public breaking-down of Twitter.

(Fuck, now I’m tempted to try and cook up something for MoreWrite discussing how I expect the bubble to play out…)

The active hostility from outside the tech world is going to make this one interesting, since unlike crypto this one seems to have a lot of legitimate energy behind it in the industry even as it becomes increasingly apparent that even if the technical capability was there (e.g. the bullshit problems could be solved by throwing enough compute and data at the existing paradigm, which looks increasingly unlikely) there’s no way to do it profitably given the massive costs of training and using these models.

I wonder if we’re going to see any attempts to optimize existing models for the orgs that have already integrated them in the same way that caching a web page or indexing a database can increase performance without doing a whole rebuild. Nvidia won’t be happy to see the market for GPUs fall off, but OpenAI might have enough users of their existing models that they can keep operating even while dramatically cutting down on new training runs? Does that even make sense, or am I showing my ignorance here?

Write it! The time is right