Highlights: The White House issued draft rules today that would require federal agencies to evaluate and constantly monitor algorithms used in health care, law enforcement, and housing for potential discrimination or other harmful effects on human rights.

Once in effect, the rules could force changes in US government activity dependent on AI, such as the FBI’s use of face recognition technology, which has been criticized for not taking steps called for by Congress to protect civil liberties. The new rules would require government agencies to assess existing algorithms by August 2024 and stop using any that don’t comply.

What’s your definition of AI here, ’cause I legit can’t tell at this point. Are we talking algorithmic learning, or are we just talking basic non-learning software that people call AI but is basically just a general improvement on, say, stabilizing video in a recording?

AI is a lay name for the field of deep learning: systems that use data to create a function that performs a task.

There are other branches of machine learning, but deep learning with neural nets blows them out of the water when it comes to “pushing the envelope”, and those other branches aren’t really what people think of when they think of AI, even if most use cases really don’t need deep learning.

I’d be against regulating those algorithms as well, but they’re so boring nobody really cares and they’ll always be available open source.

Even ChatGPT and other LLMs are not learning as you use them. A really big and growing fraction of the stuff your computer does depends on trained neural nets.

I’m aware of the fact they’re trained neural nets and yada yada, I’m just fucken tired and have hit the shit-at-words stage.

Also, I realized that most of the focus for these laws and whatnot is probably on advertisement AI, or at least use in that way, and maybe self-driving cars. Training an AI to do open heart surgery probably ain’t on the chopping block.

Which makes me wonder if I should’ve opened with that point in my initial comment. Regardless, I do think you’re overreacting a bit on the political front. Frankly, slowing R&D down a bit can be good; a lot of folks rush shit and it results in damage due to immature tech. As bad as the Dems can be, remember the best of the Republicans are allied with technofeudal libertarians and christofascists. Don’t let perfection be the enemy of good enough; if the price to pay for long-term stability is slower R&D, so be it. Rome lasted 2000 years by being slow and meticulous; Alexander the Great’s empire lasted a whole 5 minutes after his death ’cause he rushed the whole damned process.

Now I’m gonna go get food from Taco Bell before I get philosophical and insufferable. I like being a redneck berserker, not a fucking SoCal Socrates.

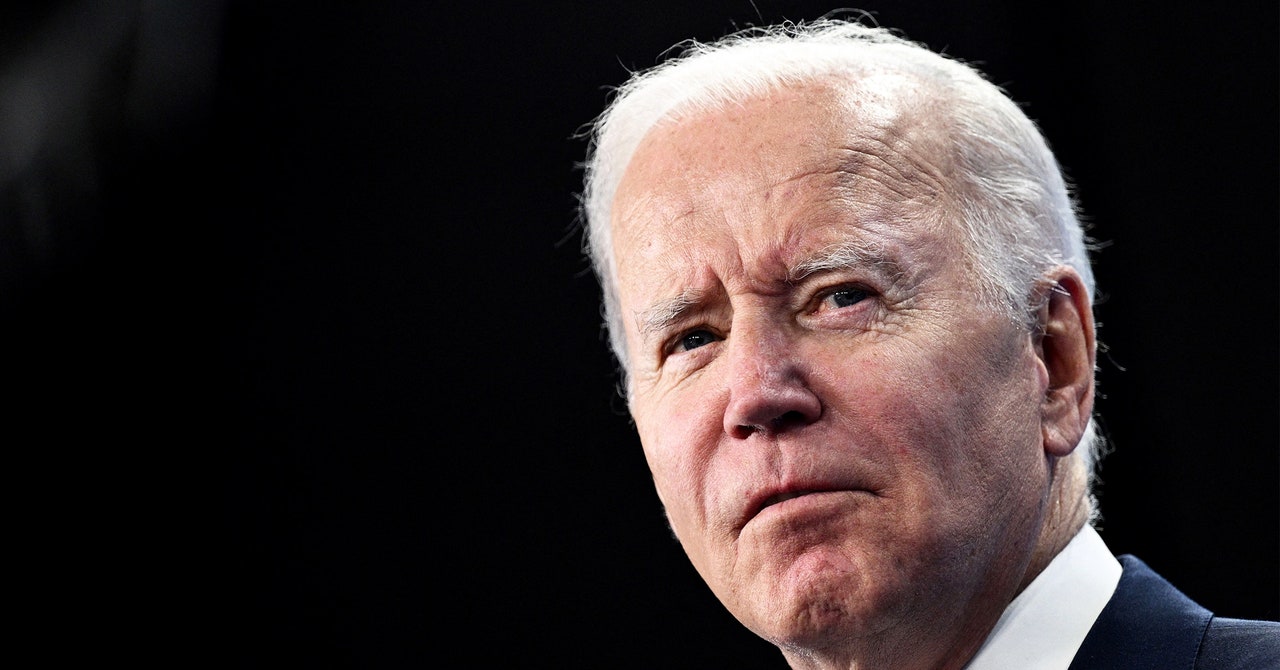

Biden hasn’t crossed the line yet. I’m not reacting at all, just cautious and making my opinion clear as a way to (ever so slightly) encourage further regulation not to happen.

It’s not slowing development. They’re making sure the common person doesn’t have access to it. They are going to ensure only big companies see the benefit.