Also, the bigger problem is buried at the bottom of the article:

As for water usage, data centers consumed 21.2 billion liters of water in 2014. That hit 66bn in 2023, with 84 percent of that going to hyperscale data centers. Hyperscalers alone are expected to consume between 60 and 124 billion liters in 2028.
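The quoted figures imply a steep growth curve. Here is a quick sketch of the arithmetic that uses only the article's numbers (the span from 2014 to 2023 is 9 years). It works out to roughly 3.1x growth overall, about 13% a year, and roughly 55 billion liters for hyperscalers in 2023.

```python
# Figures quoted from the article, in billions of liters of water.
USE_2014 = 21.2
USE_2023 = 66.0
HYPERSCALE_SHARE_2023 = 0.84
# Projected range for hyperscalers alone in 2028, also from the article.
HYPERSCALE_2028_LOW, HYPERSCALE_2028_HIGH = 60.0, 124.0

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

growth = cagr(USE_2014, USE_2023, 2023 - 2014)
hyperscale_2023 = USE_2023 * HYPERSCALE_SHARE_2023

print(f"Total growth 2014-2023: {USE_2023 / USE_2014:.2f}x")
print(f"Implied average growth: {growth:.1%} per year")
print(f"Hyperscale use in 2023: {hyperscale_2023:.1f} billion liters")
print(f"2028 hyperscale projection vs 2023: "
      f"{HYPERSCALE_2028_LOW / hyperscale_2023:.2f}x to "
      f"{HYPERSCALE_2028_HIGH / hyperscale_2023:.2f}x")
```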

We are running out of fresh water as it is.

https://abcnews.go.com/US/parts-america-water-crisis/story?id=98484121

We can potentially find new energy sources, or find ways to make our current ones much more efficient. Finding new sources of fresh water is much harder, and we can’t make our bodies or our crops need less water.

21.2 billion litres of water

fresh water

Are we sure it’s fresh? Are we excluding projects like data centers with seaside cooling loops?

Do you think that if you exclude those, it still isn’t a major freshwater waste issue during a water crisis?

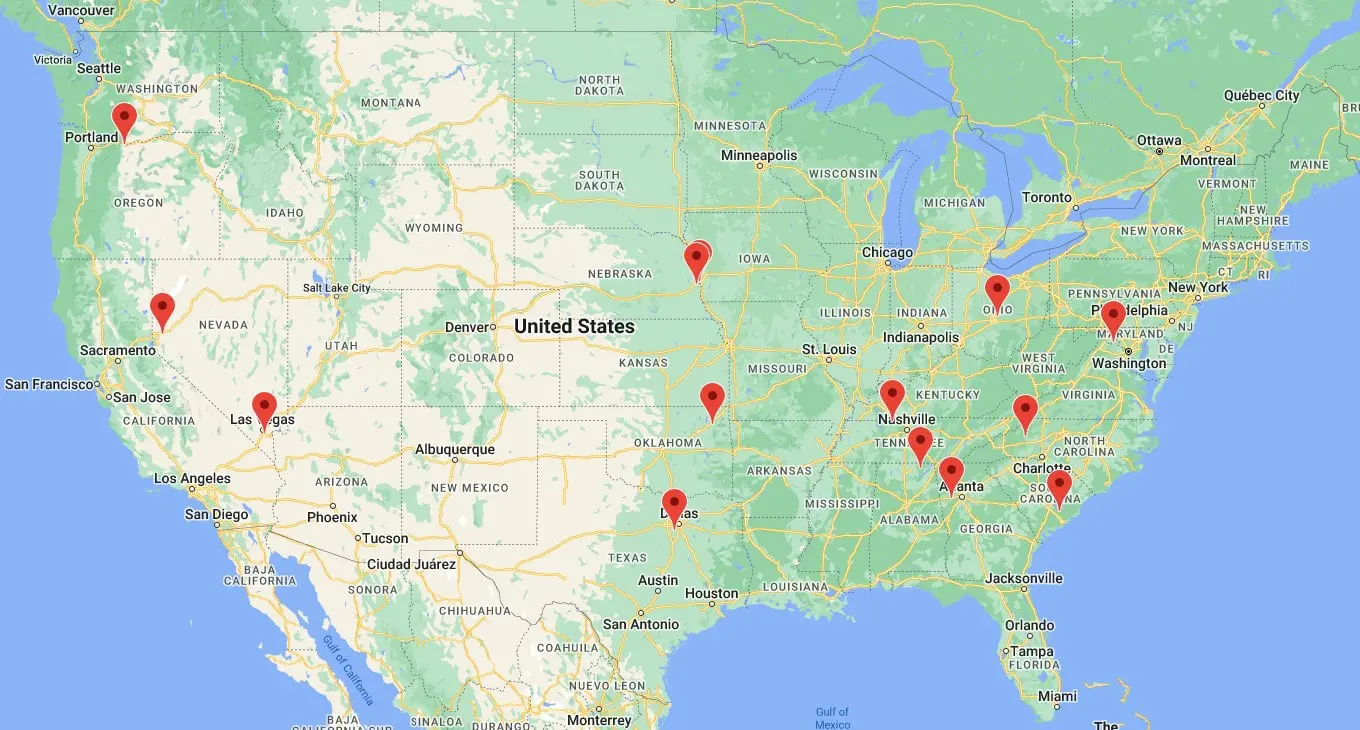

Here’s where just Google’s data centers are. How many of those do you think are cooled with sea water?

Edit: Yes really. They have a DATA CENTER in LAS VEGAS.

They have a few, and some of them have heavily armed security as well. I had a tour of one when a company I worked for was looking for a secondary DC. Bulletproof glass, assault rifles, body scanners, and a few other nutty things. Those gambling establishments have to store their data somewhere.

Isn’t the whole point of a data center that you don’t need to be near one to store your data?

Depends on the data. For the family photos and 90s home videos you digitized, it doesn’t matter where they are stored. If it’s financial data or a stock exchange, then speed, and therefore proximity, plays a big role.

Then I guess the only option is to shut down Las Vegas and never go back. I’d be fine with that.

Web performance is interesting, if you like that nerdy stuff. More than half of people will abandon a website if the initial load takes 3 seconds. Most companies aim for an initial load of 1 second. After the initial load, people perceive elements of the site as ‘functional’ if they respond in 200 ms or less. Traversing the web from coast to coast in the US already takes ~50 ms, so actually doing something with the user’s data has to be very fast.

Outside the US, especially in India, latency is pretty terrible. Because of how connections are routed through different vendors, you’ll sometimes have one half of a city with very fast times and the other half with terrible ones. Same for mobile networks. It’s absolutely the unregulated wild west out there, and users don’t see it. There are also bad actors, accidental misconfigurations, and bad planning all over the place.
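A rough sketch of why the ~50 ms figure eats so much of the 200 ms budget. It assumes light in optical fiber travels about 200,000 km/s (roughly two thirds of its speed in a vacuum) and a straight-line New York to San Francisco distance of about 4,100 km; real fiber routes are longer, so actual round trips land above this physical floor. The budget loop reuses the comment’s 200 ms and 50 ms numbers.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, ignoring routing and queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS

def remaining_budget_ms(budget_ms: float, rtt_ms: float, round_trips: int) -> float:
    """Time left for server-side work after paying for the network round trips."""
    return budget_ms - rtt_ms * round_trips

# Assumption: ~4,100 km straight-line distance, New York to San Francisco.
best_case_rtt = round_trip_ms(4100)
print(f"Physical floor for a coast-to-coast round trip: {best_case_rtt:.0f} ms")

# The ~50 ms coast-to-coast figure against the 200 ms "feels functional" target.
for trips in (1, 2, 3):
    left = remaining_budget_ms(200, 50, trips)
    print(f"{trips} round trip(s): {left:.0f} ms left for the application")
```

Every extra round trip a request needs (a new connection, a redirect, a dependent API call) comes straight out of the application’s share.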

So having both data centers and lots of mini data centers called PoPs (points of presence) near large cities is how the internet stays fast. These PoPs handle the initial connection and set up encryption between the user and the data center application, sometimes work out the best way to steer traffic, and sometimes serve cacheable content.
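A back-of-the-envelope model of why terminating connections at a nearby PoP helps. It assumes a fresh HTTPS connection over TCP and TLS 1.3 without 0-RTT or TCP Fast Open (one round trip for the TCP handshake, one for TLS, then one for the request), and a PoP that keeps a warm connection open to the origin. The round-trip times and the `Path` type are hypothetical, and server processing time is ignored.

```python
from dataclasses import dataclass

# Assumption: TCP handshake (1 RTT) + TLS 1.3 handshake (1 RTT) before the request.
SETUP_ROUND_TRIPS = 2

@dataclass
class Path:
    user_to_pop_ms: float     # RTT between the user and the nearest PoP (hypothetical)
    pop_to_origin_ms: float   # RTT between the PoP and the origin data center (hypothetical)
    user_to_origin_ms: float  # RTT if the user connects to the origin directly (hypothetical)

def direct_ttfb(p: Path) -> float:
    """Handshakes and the request all travel the full distance to the origin."""
    return (SETUP_ROUND_TRIPS + 1) * p.user_to_origin_ms

def via_pop_ttfb(p: Path, cache_hit: bool) -> float:
    """Handshakes end at the PoP; on a miss the PoP forwards over a warm origin connection."""
    setup = SETUP_ROUND_TRIPS * p.user_to_pop_ms
    request = p.user_to_pop_ms + (0 if cache_hit else p.pop_to_origin_ms)
    return setup + request

path = Path(user_to_pop_ms=10, pop_to_origin_ms=45, user_to_origin_ms=50)
print(f"Direct to origin:   {direct_ttfb(path):.0f} ms")                   # 150 ms
print(f"Via PoP, cache miss: {via_pop_ttfb(path, cache_hit=False):.0f} ms")  # 75 ms
print(f"Via PoP, cache hit:  {via_pop_ttfb(path, cache_hit=True):.0f} ms")   # 30 ms
```

Only one trip to the distant origin remains on a miss, and none on a hit, which is where the PoP earns its keep.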

Another thing that keeps sites fast is content delivery networks, or CDNs. CDN companies sell their services to other companies on the internet. Their value is in maintaining a large number of PoPs and data centers to store your highly cacheable content that rarely changes, like your profile picture on LinkedIn or Facebook. All CDNs do is serve up cached files quickly, using many data centers and a large number of PoPs. So when you load a web page, it’s fetching stuff not just from the company that owns the site, but from CDNs, and often from third-party servers as well, like trackers and servers that provide fonts and pictures. It’s a huge mess.
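The core of what a CDN edge node does can be sketched as a cache with an expiry time per file. This is a toy model, not any real CDN’s implementation: `EdgeCache`, the `origin` function, and the one-hour TTL are hypothetical, and real CDNs take their freshness rules from HTTP headers such as `Cache-Control`.

```python
import time
from typing import Callable, Dict, Tuple

class EdgeCache:
    """Toy CDN edge node: serve a cached copy until its TTL expires, else fetch from origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}  # path -> (expiry time, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            self.hits += 1  # fresh copy at the edge: no trip to the origin
            return entry[1]
        # Missing or stale: go back to the origin and refresh the cached copy.
        self.misses += 1
        body = self._fetch(path)
        self._store[path] = (now + self._ttl, body)
        return body

origin_calls = []

def origin(path: str) -> bytes:  # hypothetical origin server
    origin_calls.append(path)
    return b"<image bytes for " + path.encode() + b">"

cache = EdgeCache(origin, ttl_seconds=3600)
for _ in range(1000):
    cache.get("/profile/123.jpg")
print(cache.hits, cache.misses, len(origin_calls))  # 999 1 1
```

A profile picture requested a thousand times within its TTL costs the origin a single request, which is why rarely changing content is what CDNs are built for.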

Video and voice are the new frontier of this stuff, and they add yet another layer of complication on top of the previous ones. They demand low latency all the time, so a lot of it is deployed in PoPs or runs over peer-to-peer connections when possible.

All that wasted energy for products no one asked for that are objectively trash.

100%. I work in tech, and my entire career has not benefited the public in any way. It’s often even actively harmful. If someone somehow knocked out the entire internet and handed me a rake, the world would be a better, more productive place, and psychologists say we’d all be happier.

With AI deployments continuing to increase, US data center energy use grew at an increasing rate, hitting 176 TWh by 2023, representing 4.4 percent of total US electricity consumption.
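To put the quoted 176 TWh in perspective, here is a small sketch using only the two numbers in the quote. It shows the total US consumption they imply (about 4,000 TWh) and the equivalent continuous power draw (about 20 GW, averaged over a 365-day year).

```python
DC_TWH_2023 = 176.0   # US data center consumption in 2023 (from the quote)
DC_SHARE = 0.044      # share of total US electricity consumption (from the quote)
HOURS_PER_YEAR = 8760  # 365 days * 24 hours

implied_total_twh = DC_TWH_2023 / DC_SHARE
# 1 TWh = 1,000 GWh, so TWh * 1000 / hours gives the average draw in GW.
avg_power_gw = DC_TWH_2023 * 1000 / HOURS_PER_YEAR

print(f"Implied total US consumption: {implied_total_twh:,.0f} TWh")
print(f"Average continuous data center draw: {avg_power_gw:.1f} GW")
```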

The cloud bad. It was all a lie. Self-hosting is more energy efficient and cost-effective.

Private cloud and public cloud are each better suited to different goals.

Here, though, DC costs can be swept under the rug.

Opex (operational expenditure) vs. capex (capital expenditure) reporting rules steer companies toward the cloud, which is classified as opex. Running your own DC is classified as capex. Capex makes shareholders very unhappy when expensive infrastructure is bought. If the government wanted to, it could change the rules so that cloud company fees counted as capex, and the cloud companies would drastically shrink.
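A simplified sketch of the difference in how the two show up. All dollar figures and the 4-year straight-line depreciation period are hypothetical, and it ignores power, staff, financing, and the finer points of real accounting standards. Owning hardware front-loads the cash outlay into year one, even though the reported expense is spread out as depreciation. Cloud fees are paid and expensed evenly each year.

```python
# Hypothetical numbers for illustration only.
SERVER_PURCHASE = 1_200_000   # up-front hardware purchase (capex)
USEFUL_LIFE_YEARS = 4         # straight-line depreciation period (assumption)
CLOUD_FEE_PER_YEAR = 330_000  # equivalent yearly cloud bill (opex)

def own_dc(year: int) -> tuple[int, int]:
    """(cash paid, expense reported) in a given year for owned hardware."""
    cash = SERVER_PURCHASE if year == 1 else 0      # all the cash goes out in year 1
    expense = SERVER_PURCHASE // USEFUL_LIFE_YEARS  # depreciation spreads the cost
    return cash, expense

def cloud(year: int) -> tuple[int, int]:
    """Cloud fees are paid and expensed in the same year, every year."""
    return CLOUD_FEE_PER_YEAR, CLOUD_FEE_PER_YEAR

for year in range(1, USEFUL_LIFE_YEARS + 1):
    oc, oe = own_dc(year)
    cc, ce = cloud(year)
    print(f"Year {year}: own DC cash {oc:>9,} expense {oe:>7,} | "
          f"cloud cash {cc:>7,} expense {ce:>7,}")
```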

The cloud wasn’t the worst idea when storage was pricey. These days, I can get a 2 TB SSD for $100, download a ton of movies, TV shows, and games, and still take months to fill it up. A cursory web search tells me a 2 TB SSD can hold over 1,000 1080p movies.
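The movie count depends entirely on file size, which varies a lot with codec and bitrate. This sketch treats “2 TB” as decimal terabytes (how drive makers count), ignores filesystem overhead, and uses a few assumed per-movie sizes. “Over 1,000” holds as long as the average 1080p movie is under about 2 GB.

```python
DRIVE_BYTES = 2 * 10**12  # "2 TB" in decimal terabytes, as drives are marketed

def movies_that_fit(gb_per_movie: float) -> int:
    """Whole movies that fit if each one takes `gb_per_movie` decimal gigabytes."""
    return int(DRIVE_BYTES // (gb_per_movie * 10**9))

# Assumption: compressed 1080p movie sizes in this range are common.
for size_gb in (1.5, 2.0, 4.0, 8.0):
    print(f"{size_gb:>4} GB per movie -> {movies_that_fit(size_gb):,} movies")
```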

And if you’re buying a bunch of really big games, you weren’t going to put them on the cloud to begin with even when cloud storage made a bit of sense.

Have no fear: except for Texas with its mismanaged power grid, we have coal plants we can turn back on to take care of that. And the coal miners we were retraining for other jobs can stop retraining and get back to work in the coal mines. The US has a functionally infinite supply of coal, so everyone just calm down.

The cat pics and government propaganda stream will continue to flow even if we have to turn our earth into a flaming greenhouse gasball to do it.

More power! We need more power for what no one wanted!